Converting a single disk Ubuntu system to RAID1 (adding a second disk)

Created for Phil, using Ubuntu 9.10 Karmic Koala

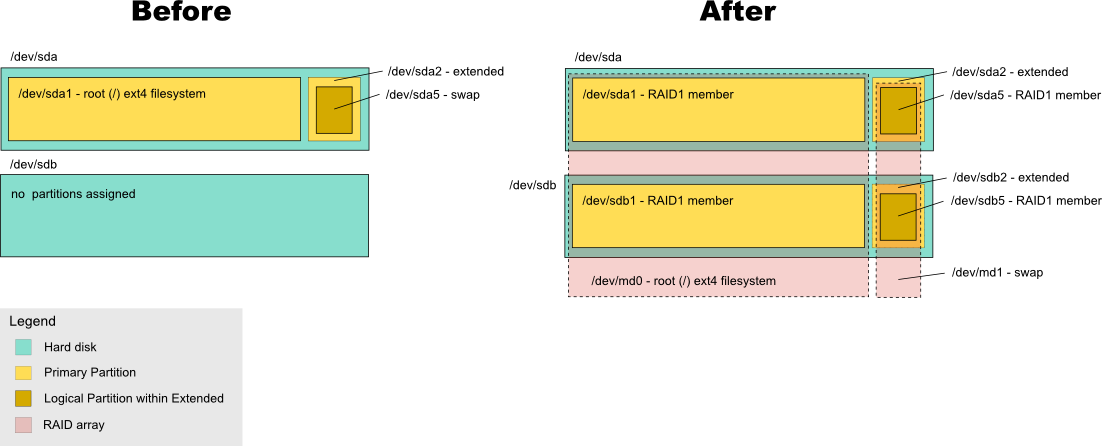

This procedure takes a standard install of Ubuntu and mirrors it to a spare hard disk, forming a RAID-1 array. By default, a standard Ubuntu install has 2 partitions in use: one for the root (/) filesystem and one for swap space. This procedure sets up a separate RAID array for each of these (root filesystem and swap). It makes heavy use of the Linux shell (terminal). In Ubuntu you can open a terminal from the Applications menu, under Accessories.

1. Determine device names to be used in the procedure.

The layout of drives within the system can be confirmed by typing the following into a terminal:

cat /proc/partitions

In Linux, drives are referenced by letter, and the partitions on a drive are referenced by a number after the letter. For example, the second partition of the third SATA/SCSI disk is /dev/sdc2 (on older IDE systems, /dev/hdc2). Make doubly sure you know which drive is your “first drive” (the one we will be mirroring from) and which is your “second drive” (the spare one we will be mirroring to). In this procedure, we assume that your first hard disk is /dev/sda and your second hard disk is /dev/sdb.
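You may prefer not to read /proc/partitions by eye. The sketch below (POSIX sh plus awk) lists the whole disks and the partitions on one disk. The function names list_disks and list_partitions are hypothetical. The sketch assumes the sdX/hdX naming used in this procedure, where a partition is the disk name followed by a number; other naming schemes are not handled. Running list_partitions sdb can also serve as a rough check in step 2 that the new drive has no partitions left.

```shell
# Hypothetical helpers (names are illustrative): summarise /proc/partitions.
# Assumption: whole disks have letter-only names (sda, sdb, hda) and
# partitions append a number (sda1, sda5).

# Print the name of every whole disk, one per line.
list_disks() {
    table="${1:-/proc/partitions}"
    # Lines 1-2 are the header and a blank line; field 4 is the device name.
    awk 'NR > 2 && $4 ~ /^[a-z]+$/ { print $4 }' "$table"
}

# Print "<partition> <size> KiB" for each partition on the given disk.
list_partitions() {
    disk="$1"
    table="${2:-/proc/partitions}"
    # Field 3 is the size in 1 KiB blocks.
    awk -v d="$disk" 'NR > 2 && $4 ~ ("^" d "[0-9]+$") { print $4, $3, "KiB" }' "$table"
}

# Example:
#   list_disks              # e.g. sda and sdb on a two-disk machine
#   list_partitions sdb     # prints nothing once /dev/sdb is blank
```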

2. Make sure the newly added drive is completely blank

For this we will use the fdisk utility.

sudo fdisk /dev/sdb

Familiarise yourself with this useful utility. The commands we will be using are:

- m - Print help screen (shows all commands possible)

- p - Print partition table (to screen)

- d - Delete partition

- n - Add a new partition

- t - Change partition “type” label

- a - Toggle a partition's bootable flag

- q - Quit without saving

- w - Write table to disk and quit.

Using a combination of the “p” and “d” commands, make sure that ALL partitions are deleted from /dev/sdb. The partition table should then be empty, something like this:

Command (m for help): p

Disk /dev/sdb: 40.1 GB, 40020664320 bytes

255 heads, 63 sectors/track, 4865 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk identifier: 0xeea1cf79

Device Boot Start End Blocks Id System

Command (m for help):

Once all partitions are deleted, use the “w” command to write (save) the partition table and quit.

3. Grab the partition sizes from the first disk

Now we are going to use fdisk to note the exact partition layout of the first disk. Type the following:

sudo fdisk /dev/sda

Use the p command to print the partition table. This should look like the following:

Command (m for help): p

Disk /dev/sda: 40.0 GB, 40020664320 bytes

255 heads, 63 sectors/track, 4865 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk identifier: 0xdcf4dcf4

Device Boot Start End Blocks Id System

/dev/sda1 * 1 4679 37584036 83 Linux

/dev/sda2 4680 4865 1494045 5 Extended

/dev/sda5 4680 4865 1494013+ 82 Linux swap / Solaris

Use the q command to quit without saving. The above is an example only. Write down the partition layout, including the exact Start and End of each partition. In the above example, partitions 1 and 2 are primary partitions. Partition 2 is of “extended” type, which means it acts as a container for logical partitions. Partition 5 is a logical partition inside partition 2.

4. Partition the second disk identically, with "linux raid autodetect" type labels

In this step, we will partition the second drive (/dev/sdb) to be identical in partition layout to the first drive (/dev/sda). Type the following to start the fdisk utility on /dev/sdb.

sudo fdisk /dev/sdb

Now use the “n” command to create partitions identical to the ones noted in the previous step on /dev/sda. The following is an example only, which uses figures from the example in the previous step.

Command (m for help): n

Command action

e extended

p primary partition (1-4)

p

Partition number (1-4): 1

First cylinder (1-4865, default 1):

Using default value 1

Last cylinder, +cylinders or +size{K,M,G} (1-4865, default 4865): 4679

Command (m for help): n

Command action

e extended

p primary partition (1-4)

e

Partition number (1-4): 2

First cylinder (4680-4865, default 4680):

Using default value 4680

Last cylinder, +cylinders or +size{K,M,G} (4680-4865, default 4865):

Using default value 4865

Command (m for help): n

Command action

l logical (5 or over)

p primary partition (1-4)

l

First cylinder (4680-4865, default 4680):

Using default value 4680

Last cylinder, +cylinders or +size{K,M,G} (4680-4865, default 4865):

Using default value 4865

If you like, use the p command now to see what you've set up. The Start, End and Blocks values should be identical to those on the first drive. We will now use the “t” command to set the type of the new partitions to “Linux raid autodetect” (type code fd), since they will be array members. We will then use the “a” command to mark partition 1 as bootable.

Command (m for help): t

Partition number (1-5): 1

Hex code (type L to list codes): fd

Changed system type of partition 1 to fd (Linux raid autodetect)

Command (m for help): t

Partition number (1-5): 5

Hex code (type L to list codes): fd

Changed system type of partition 5 to fd (Linux raid autodetect)

Command (m for help): a

Partition number (1-5): 1

Now use the “p” command to confirm that the partition layout is identical to the first drive, as in the following example:

Command (m for help): p

Disk /dev/sdb: 40.0 GB, 40020664320 bytes

255 heads, 63 sectors/track, 4865 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk identifier: 0x252e095a

Device Boot Start End Blocks Id System

/dev/sdb1 * 1 4679 37584036 fd Linux raid autodetect

/dev/sdb2 4680 4865 1494045 5 Extended

/dev/sdb5 4680 4865 1494013+ fd Linux raid autodetect

Finally, use the “w” command to write the partition layout to disk, and quit.

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.
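Instead of comparing the two fdisk listings by eye, you could compare dumps made with sfdisk (part of util-linux, like fdisk). Its -d option prints a partition table in text form. The sketch below assumes that dump format. It removes header lines, replaces the disk name and drops the type Id, since the types are meant to differ (83/82 on /dev/sda versus fd on /dev/sdb). The function names are hypothetical. The exact dump layout varies between sfdisk versions, so treat the normalisation as best-effort.

```shell
# Hypothetical helpers: compare two `sfdisk -d` dumps, ignoring the disk
# name and the partition type Id (expected to differ between the disks).
normalise_dump() {
    sed -E \
        -e '/^(#|label-id:|device:)/d' \
        -e 's#/dev/[hs]d[a-z]#/dev/sdX#g' \
        -e 's/, *(Id|type)= *[0-9a-fA-F]+//' \
        "$1"
}

# Succeeds (exit status 0) if both dumps describe the same layout,
# including start, size and bootable flag of every partition.
same_layout() {
    [ "$(normalise_dump "$1")" = "$(normalise_dump "$2")" ]
}

# Example:
#   sudo sfdisk -d /dev/sda > sda.dump
#   sudo sfdisk -d /dev/sdb > sdb.dump
#   same_layout sda.dump sdb.dump && echo "layouts match"
```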

5. Create degraded RAID-1 arrays on the second disk

In this step, the mdadm utility is required. mdadm is the main tool for managing software RAID on modern Linux systems. Type “mdadm” in your terminal to see whether it is already installed. If not, use the following command to download and install it:

sudo apt-get install mdadm

apt-get will summarise what it is about to do, which you'll need to confirm with “Y”. If you haven't already configured an email system on the machine, you'll also be prompted to configure postfix. Choose “Satellite system” and provide an SMTP server for it to use. This can then be configured to send you an email if a RAID array fails.

Once this is done, we can create our RAID arrays in degraded mode. “Degraded” means that one drive is missing from the array, so it currently has no redundancy. Here are the commands to create the RAID arrays for the root filesystem and the swap area, using the second disk only:

sudo mdadm --create /dev/md0 -l 1 -n 2 /dev/sdb1 missing

sudo mdadm --create /dev/md1 -l 1 -n 2 /dev/sdb5 missing

Note the “missing” keyword. It tells mdadm to create the array even though only one device is present (i.e. “degraded”). The “-l 1” option sets RAID level 1 (mirroring), and “-n 2” tells mdadm the array will have 2 devices. For /dev/md0 the devices are “/dev/sdb1” and “missing”; for /dev/md1 they are “/dev/sdb5” and “missing”.

6. Update RAID UUIDs in config file (mdadm.conf) and create new initramfs.

In this step we will edit the mdadm config file so that the RAID arrays are assembled during the boot process. First we need to find the UUIDs (universally unique identifiers) of the RAID arrays:

sudo mdadm --query --detail /dev/md0 | grep UUID

Repeat for /dev/md1:

sudo mdadm --query --detail /dev/md1 | grep UUID
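The UUIDs can also be extracted from the mdadm output with a short script rather than copied by hand. The sketch below assumes the detail output contains a line of the form “UUID : <uuid>”. It prints an ARRAY line in the same form as the mdadm.conf example in this step. array_line is a hypothetical name. mdadm can also print ARRAY lines for all running arrays with sudo mdadm --detail --scan, although those lines carry extra fields.

```shell
# Hypothetical helper: turn saved `mdadm --query --detail` output into an
# ARRAY line for /etc/mdadm/mdadm.conf.
# Assumption: the output has a line like
#   "UUID : 997fffd8:96fc292b:9cfa26d1:6098b1cf"
array_line() {
    md="$1"       # array device, e.g. /dev/md0
    detail="$2"   # file holding the output of: mdadm --query --detail "$md"
    # Default awk field splitting gives $1="UUID", $2=":", $3=<uuid>.
    uuid=$(awk '$1 == "UUID" && $2 == ":" { print $3; exit }' "$detail")
    [ -n "$uuid" ] || return 1    # no UUID line found
    printf 'ARRAY %s uuid=%s\n' "$md" "$uuid"
}

# Example:
#   sudo mdadm --query --detail /dev/md0 > md0.detail
#   array_line /dev/md0 md0.detail
```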

Open a new terminal window and type the following:

sudo nano /etc/mdadm/mdadm.conf

Nano is a simple text editor. Navigate down to the comment “# definitions of existing MD arrays” and insert two ARRAY lines under it, as in the following example. The UUIDs in the example are examples only; copy and paste the UUIDs from the other terminal window into your ARRAY lines:

# definitions of existing MD arrays

ARRAY /dev/md0 uuid=997fffd8:96fc292b:9cfa26d1:6098b1cf

ARRAY /dev/md1 uuid=02b854fd:6358775f:9cfa26d1:6098b1cf

Make sure you've copied and pasted the RAID UUIDs precisely, without missing any characters. Press CTRL+X, then Y and Enter, to save the file and exit nano. We now run the following command to rebuild the initramfs (initial RAM filesystem). The initramfs is one of the first things loaded when you boot your PC, just after the kernel is loaded into memory:

sudo update-initramfs -u

7. Format the new arrays

The first array (/dev/md0) will be formatted as ext4 filesystem, and the second array (/dev/md1) will be formatted as swap space. Here are the commands:

sudo mkfs.ext4 /dev/md0

sudo tune2fs -c0 -i0 /dev/md0

sudo mkswap /dev/md1

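The tune2fs command sets two parameters on the filesystem. “-c0” turns off the forced check after a set number of mounts (e.g. every 30 boots), and “-i0” turns off the forced check after a set time interval (e.g. every 180 days).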

8. Mount the new root array to a mountpoint

We now need to mount the newly created ext4 filesystem on /dev/md0 at a mountpoint (which means we first need to create one). A mountpoint is a directory to which a filesystem is attached, loosely similar to the “drive letter” concept in Windows. The following commands create a mountpoint and mount the new, empty filesystem we created in the previous step:

cd /mnt

sudo mkdir temp-newroot

sudo mount /dev/md0 temp-newroot

Type “df -h” to see the disk space free, confirming the array is mounted correctly, as per the following example:

/mnt$ df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda1 36G 2.9G 31G 9% /

udev 249M 252K 249M 1% /dev

none 249M 188K 249M 1% /dev/shm

none 249M 112K 249M 1% /var/run

none 249M 0 249M 0% /var/lock

none 249M 0 249M 0% /lib/init/rw

/dev/md0 36G 177M 34G 1% /mnt/temp-newroot

9. Bind-mount the old partition to a mountpoint

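To simplify the copy, we are going to bind-mount the current root filesystem at a new mountpoint, which we will first create. This avoids copying recursively into /mnt/temp-newroot itself, or into virtual filesystems such as /proc. A plain bind mount of / shows only the root filesystem itself, without anything mounted beneath it. This step assumes we are still working in the /mnt directory (we changed to it in the previous step).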

sudo mkdir temp-oldroot

sudo mount -o bind / temp-oldroot

You can confirm this has worked by typing “ls /mnt/temp-oldroot” and checking that it looks the same as “ls /”.

10. Copy the data from old root partition to new root array.

Here's the command to copy data from the old (current) root filesystem to the new, empty root filesystem on the RAID-1 array (/dev/md0). This assumes our current working directory is still /mnt. Close as many running programs as you can before copying, so that files are not changing during the copy.

sudo cp -d -p -R -v temp-oldroot/* temp-newroot

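You should see a verbose listing of the files being copied (that's what -v does). The other options keep the copy faithful: -d copies symbolic links as links, -p preserves ownership, permissions and timestamps, and -R copies directories recursively. Wait for the copy to finish.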
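In the shell, temp-oldroot/* does not match names beginning with a dot, so any hidden files directly under / would be skipped (there are usually none). The sketch below is a variation, not the original command. It copies temp-oldroot/. instead, which includes hidden entries, and then compares the number of entries in both trees as a rough sanity check. copy_tree is a hypothetical name. On the real system it would need to run from a root shell (for example sudo -s), because find must be able to read every directory.

```shell
# Hypothetical helper: copy a tree with the same cp options as step 10,
# but using "src/." so top-level hidden entries are included, then compare
# entry counts as a rough check (not a byte-for-byte verification).
copy_tree() {
    src="$1"
    dst="$2"
    # -d keep symlinks as symlinks, -p keep mode/ownership/timestamps,
    # -R recurse into directories.
    cp -d -p -R "$src/." "$dst" || return 1
    # find also lists the top directory itself, so equal trees give equal counts.
    n_src=$(find "$src" | wc -l | tr -d ' ')
    n_dst=$(find "$dst" | wc -l | tr -d ' ')
    [ "$n_src" -eq "$n_dst" ]
}

# Example, from a root shell in /mnt:
#   copy_tree temp-oldroot temp-newroot && echo "entry counts match"
```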

11. Adjust filesystem UUIDs in /etc/fstab to point to the new arrays

We now need to edit the linux file system table (fstab) in the NEW filesystem on the RAID array, so that when the system boots up, it will be mounting the RAID array (/dev/md0) and not the first hard disk (/dev/sda1) as the root filesystem (/).

First, open a new terminal window, and use the following commands to find the UUID (universally unique identifier) values for the new root filesystem (/dev/md0) and the new swap area (/dev/md1):

sudo blkid /dev/md0

sudo blkid /dev/md1

Now go back to the original terminal window and type:

sudo nano /mnt/temp-newroot/etc/fstab

Navigate down to the first “UUID=” line. It will look similar to the following, but not identical (remember it's a “unique” identifier). Note the single / after the UUID, signifying that this line is for the root filesystem:

UUID=470118f4-59ec-466a-b8d4-ed46345b1aae / ext4 errors=remount-ro 0 1

Now edit this line so that the UUID points to the new RAID array, as follows:

- Backspace the UUID all the way back to the equals sign

- Go to the terminal window where you ran the blkid commands.

- Highlight and copy the UUID value for the /dev/md0 array to the clipboard

- Go back to the fstab terminal and paste the UUID.

- Confirm it's the right number of characters, and that you didn't accidentally miss one.

- Repeat these steps for the new swap array (/dev/md1)

Press CTRL+X, then Y and Enter, to save the fstab and exit the nano text editor.
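The manual edit above can also be scripted. The sketch below replaces the UUID= field on the fstab line whose mount point matches. It keeps a copy of the original as fstab.bak and leaves comments and other lines untouched. set_fstab_uuid is a hypothetical name. It assumes the root line uses the mount point / and the swap line uses none, as in a default Ubuntu 9.10 install. blkid -s UUID -o value prints just the UUID of a device, which avoids copy-and-paste mistakes.

```shell
# Hypothetical helper: set the UUID on the fstab line for one mount point
# ("/" for root, "none" for swap), keeping the original as <fstab>.bak.
set_fstab_uuid() {
    fstab="$1"; mountpoint="$2"; new_uuid="$3"
    [ -n "$new_uuid" ] || return 1
    cp "$fstab" "$fstab.bak" || return 1
    awk -v mp="$mountpoint" -v uuid="$new_uuid" '
        # Only non-comment lines starting with UUID= whose 2nd field is the mount point.
        $1 ~ /^UUID=/ && $2 == mp { sub(/^UUID=[-0-9A-Za-z]+/, "UUID=" uuid) }
        { print }
    ' "$fstab.bak" > "$fstab"
}

# Example, from a root shell (sudo -s) with /dev/md0 mounted as in step 8:
#   set_fstab_uuid /mnt/temp-newroot/etc/fstab /    "$(blkid -s UUID -o value /dev/md0)"
#   set_fstab_uuid /mnt/temp-newroot/etc/fstab none "$(blkid -s UUID -o value /dev/md1)"
```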

12. Adjust the UUIDs in /boot/grub/grub.cfg to point to the new root array

This step makes the bootloader boot from the RAID array (/dev/md0) instead of the old drive (/dev/sda1). This change is only temporary, because we are editing the file on the OLD root partition and grub.cfg is regenerated whenever update-grub runs. We will make it permanent once we have rebooted into the new system.

sudo nano /boot/grub/grub.cfg

Look for the section that refers to the first boot menu entry. You're looking for a couple of lines that look like this:

search --no-floppy --fs-uuid --set 470118f4-59ec-466a-b8d4-ed46345b1aae

linux /boot/vmlinuz-2.6.31-14-generic root=UUID=470118f4-59ec-466a-b8d4-ed46345b1aae ro quiet splash

Replace both UUIDs on those lines with the filesystem UUID of /dev/md0, as reported by blkid in step 11. Then press CTRL+X, then Y and Enter, to save the file and exit.
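The grub.cfg change can also be sketched as a search-and-replace. It swaps the old root filesystem UUID (the one /dev/sda1 currently has) for the filesystem UUID of /dev/md0. This replaces every occurrence, so it also updates the other menu entries (such as recovery mode), which goes slightly beyond the single entry described above. The function name swap_grub_uuid is hypothetical, and a backup is kept as grub.cfg.bak.

```shell
# Hypothetical helper: replace every occurrence of one UUID with another in
# a grub.cfg, keep a backup, and fail if the old UUID is still present.
swap_grub_uuid() {
    cfg="$1"; old="$2"; new="$3"
    # Guard against empty values (e.g. if blkid failed).
    [ -n "$old" ] && [ -n "$new" ] || return 1
    cp "$cfg" "$cfg.bak" || return 1
    # UUIDs contain only hex digits and hyphens, so no regex escaping is needed.
    sed "s/$old/$new/g" "$cfg.bak" > "$cfg" || return 1
    remaining=$(grep -c -- "$old" "$cfg")
    echo "lines still using old UUID: $remaining"
    [ "$remaining" -eq 0 ]
}

# Example, from a root shell:
#   swap_grub_uuid /boot/grub/grub.cfg \
#       "$(blkid -s UUID -o value /dev/sda1)" "$(blkid -s UUID -o value /dev/md0)"
```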

13. Unmount filesystems, reboot into new root array.

We are now ready to unmount the new filesystem and reboot into it. Run this:

sudo umount /mnt/temp-oldroot

sudo umount /mnt/temp-newroot

sudo reboot

If you've done everything correctly, the machine should reboot into the new system. Once it has booted, confirm you're running from the new RAID-1 array (/dev/md0): open a terminal, type “df -h” and check that the root filesystem line points at /dev/md0:

~$ df -h

Filesystem Size Used Avail Use% Mounted on

/dev/md0 36G 2.8G 31G 9% /

udev 249M 252K 249M 1% /dev

none 249M 136K 249M 1% /dev/shm

none 249M 116K 249M 1% /var/run

none 249M 0 249M 0% /var/lock

none 249M 0 249M 0% /lib/init/rw

By typing “cat /proc/swaps” you should see /dev/md1 as the only swap area:

~$ cat /proc/swaps

Filename Type Size Used Priority

/dev/md1 partition 1493880 0 -1
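The two checks above can be combined into a small script. The sketch below reads the device that holds / from df -P (the portable output format, one line per filesystem) and the swap areas from /proc/swaps. check_raid_boot and its helpers are hypothetical names. The expected device names /dev/md0 and /dev/md1 are the ones used throughout this procedure.

```shell
# Hypothetical helpers: confirm the system booted from the RAID arrays.
# With no arguments they read the live system; files can be passed for testing.

# Print the device holding / (line 2, field 1 of `df -P /`).
root_device() {
    if [ -n "$1" ]; then cat "$1"; else df -P /; fi | awk 'NR == 2 { print $1 }'
}

# Print every active swap area; /proc/swaps has one header line.
swap_devices() {
    awk 'NR > 1 { print $1 }' "${1:-/proc/swaps}"
}

# Succeed only if / is on /dev/md0 and /dev/md1 is the only swap area.
check_raid_boot() {
    [ "$(root_device "$1")" = /dev/md0 ] && [ "$(swap_devices "$2")" = /dev/md1 ]
}

# Example:
#   check_raid_boot && echo "running from the RAID-1 arrays"
```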

14. Change partition type labels on first drive to "linux raid autodetect"

Almost there! Now that we have successfully booted from the new arrays, we need to add the old disk (/dev/sda) to the RAID-1 arrays, making them redundant. First, use fdisk to change the partition types to “Linux raid autodetect”:

sudo fdisk /dev/sda

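Using the fdisk commands (which should be familiar by now), change the partition type labels, then save and exit, as in the following example. The warning at the end about re-reading the partition table is expected because the disk is still in use. Since only the type labels have changed, not the partition boundaries, it can be ignored here.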

Command (m for help): t

Partition number (1-5): 1

Hex code (type L to list codes): fd

Changed system type of partition 1 to fd (Linux raid autodetect)

Command (m for help): t

Partition number (1-5): 5

Hex code (type L to list codes): fd

Changed system type of partition 5 to fd (Linux raid autodetect)

Command (m for help): p

Disk /dev/sda: 40.0 GB, 40020664320 bytes

255 heads, 63 sectors/track, 4865 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Disk identifier: 0xdcf4dcf4

Device Boot Start End Blocks Id System

/dev/sda1 * 1 4679 37584036 fd Linux raid autodetect

/dev/sda2 4680 4865 1494045 5 Extended

/dev/sda5 4680 4865 1494013+ fd Linux raid autodetect

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

WARNING: Re-reading the partition table failed with error 16: Device or resource busy.

The kernel still uses the old table. The new table will be used at the next reboot or after you run partprobe(8) or kpartx(8)

Syncing disks.

15. Add old drive root partition to the new root array - making it redundant.

This is a one-liner:

sudo mdadm --manage /dev/md0 --add /dev/sda1

16. Add the second member (first drive) of the swap array, making it redundant.

Also a one-liner:

sudo mdadm --manage /dev/md1 --add /dev/sda5

17. Watch the rebuild progress. Don't forget the important last step.

Type “cat /proc/mdstat” to see how the resync of both RAID-1 arrays is progressing. You can run this command as often as you like to monitor progress. At the end of the resync, both arrays should show “[UU]” instead of “[U_]”.

$ cat /proc/mdstat

Personalities : [raid1] [linear] [multipath] [raid0] [raid6] [raid5] [raid4] [raid10]

md1 : active raid1 sda5[2] sdb5[0]

1493888 blocks [2/1] [U_]

resync=DELAYED

md0 : active raid1 sda1[2] sdb1[0]

37583936 blocks [2/1] [U_]

[>....................] recovery = 1.1% (415488/37583936) finish=35.7min speed=17312K/sec

unused devices: <none>

Wait for the RAID sync to finish completely before going on to the next step.
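If you would rather not re-run cat by hand, watch cat /proc/mdstat refreshes the output every two seconds. Alternatively, the sketch below waits until no array is degraded or syncing. It assumes the status format shown above: a missing member appears as an underscore inside the [UU]-style brackets, and a rebuild in progress mentions recovery or resync. The function names are hypothetical.

```shell
# Hypothetical helpers: detect when every array in mdstat is fully in sync.
# Assumption: a degraded array shows "_" inside its [UU]-style status, and a
# rebuild in progress shows "recovery", "resync" or "resync=DELAYED".
raid_in_sync() {
    mdstat="${1:-/proc/mdstat}"
    ! grep -Eq '\[[U_]*_[U_]*\]|recovery|resync' "$mdstat"
}

# Poll once a minute until no array is degraded or syncing.
wait_for_sync() {
    until raid_in_sync "$1"; do
        sleep 60
    done
    echo "no degraded or syncing arrays found"
}

# Example:
#   wait_for_sync && sudo update-grub
```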

18. Final update to the bootloader to account for the first drive being changed.

Type this to finalise the bootloader config and Master Boot Record on both hard disks:

sudo update-grub

sudo grub-install /dev/sda

sudo grub-install /dev/sdb

ALL DONE!!